Compliance is not protection

Jason Makansi, Pearl Street Inc

Last August, the disc drives of 30,000 workstations, representing 75% of those at petrochemical giant Saudi Aramco, were hit by a malicious virus called Shamoon. An image of a burning American flag replaced critical data on these machines. In the fall, according to a Dept of Homeland Security (DHS) report, control systems at two power stations were infected by malware delivered through USB drives. One of the plants was down for three weeks before it could restart.

Far more innocuously, while visiting a major utility not long ago, I gave my host a memory stick so he could copy some photos for me. Later, I caught myself: Aren’t we all supposed to be more careful? The last time I let a colleague insert a memory stick into my office PC’s USB port, my operating system froze up. Neither of these involved a powerplant control console, but still.

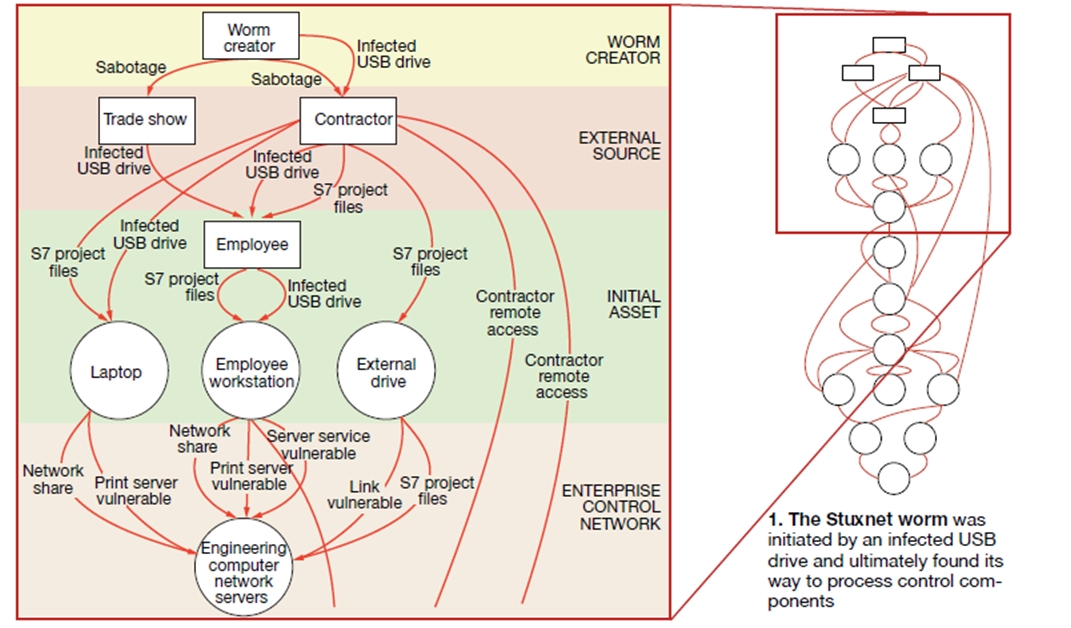

The now infamous Stuxnet worm infected critical energy facilities by way of a USB port (Fig 1). Attend a cybersecurity conference or pore through the presentations and you might start fortifying your backyard bomb shelter. Here’s a good example: “13 Ways Through a Firewall,” by Andrew Ginter, director of industrial security, Waterfall Security Solutions Ltd, presented at the DHS Industrial Control System Joint Working Group Conference last fall. Or this one, presented at the same conference: “Certificate Authorities and Public Keys: How They Work and 10+ Ways to Hack Them,” by Monta Elkins, security architect, FoxGuard Solutions®.

What’s the real threat?

Despite these incidents and scares, combined-cycle managers and staff could, perhaps, be forgiven if the cybersecurity threat is not foremost in their thoughts. After all, it appears that many combined cycles may not have to comply with NERC Critical Infrastructure Protection Standards (CIPS).

The latest revision of CIP-002-4 states that plants under 1500 MW may be exempt (although there are other criteria that could ensnare your facility, so don’t get smug). And even if you do fall under the standard, there are ways to change that. For example, replacing routable protocols for communication to and from your facility with serial protocols may take your plant off the “endangering the grid” list.

Regardless, you need to stay tuned to NERC’s latest requirements, because the criteria for determining which plants are “critical assets” are hotly contested.
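The two-step screen described above (a plant-level size test, then a check on how each cyber asset communicates) can be sketched in a few lines. This is a simplified illustration, not a compliance determination: the 1500-MW threshold comes from the text, while the single `meets_other_criteria` flag standing in for the standard’s other criteria, the field names, and the dial-up condition are assumptions to verify against the current CIP-002 text.

```python
from dataclasses import dataclass

# Simplified CIP-002-style screen (illustration only, not a compliance tool).
# The 1500-MW threshold is from the text; everything else is an assumption.
AGGREGATE_MW_THRESHOLD = 1500.0


@dataclass
class Plant:
    name: str
    aggregate_mw: float          # aggregate rating at one plant location
    meets_other_criteria: bool   # stand-in for the standard's other criteria


@dataclass
class CyberAsset:
    name: str
    essential: bool                    # needed to operate the plant
    routable_outside_perimeter: bool   # e.g., an IP link leaving the plant
    dial_up_accessible: bool


def is_critical_asset(plant: Plant) -> bool:
    """Plant-level test: size threshold or any other listed criterion."""
    return plant.aggregate_mw >= AGGREGATE_MW_THRESHOLD or plant.meets_other_criteria


def is_critical_cyber_asset(plant: Plant, asset: CyberAsset) -> bool:
    """Asset-level test: essential assets reachable by routable or dial-up links."""
    if not (is_critical_asset(plant) and asset.essential):
        return False
    return asset.routable_outside_perimeter or asset.dial_up_accessible


big_plant = Plant("Station A", aggregate_mw=1650.0, meets_other_criteria=False)
ip_rtu = CyberAsset("RTU", essential=True, routable_outside_perimeter=True, dial_up_accessible=False)
serial_rtu = CyberAsset("RTU", essential=True, routable_outside_perimeter=False, dial_up_accessible=False)
print(is_critical_cyber_asset(big_plant, ip_rtu))      # True
print(is_critical_cyber_asset(big_plant, serial_rtu))  # False
```

Swapping the routable link for a serial one flips the second result, which is the effect the text describes; the real criteria list is longer and, as noted, contested.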

The cybersecurity envelope is expanding to include control-system and communications reliability issues, which are not the same as threats from the “bad people.” Consider this definition, discussed, though certainly not unanimously agreed upon, at the ICS Cybersecurity Conference 2012, held in Norfolk, Va, last October: “Electronic communications that impair machine operation.”

It becomes difficult to treat cybersecurity as something special if it morphs to include everything that might go wrong at a power station. After all, reliability is, arguably, jobs one, two, and three at every plant. In many ways, cybersecurity is simply a subset of reliability. Let’s face it, the plants that suffered significant downtime between restarts because of a non-cybersecurity issue didn’t make the news.

Then there are the mountains of paperwork and documentation, and the different standards and guidelines emanating from multiple sources, including DHS, National Institute of Standards and Technology (NIST), NERC, FERC, EPRI, ISA, International Electrotechnical Commission (IEC), DOE, and, for a nuclear plant, NRC. Even Congress almost got into the act: It tried, but failed, to pass the Cybersecurity Act of 2012.

According to cyber and control-system experts, none of these standards includes metrics to which you might design your cybersecurity solutions, or aim your strategy. When there is no definition of “secure,” all you can do is say, impractically, “more is better.” Finally, isn’t it the vendor’s problem after all? People who run combined cycles typically are not computer programmers.

On the other hand, the cybersecurity threat can be seen as an opportunity to view your facility in a different way. If there was ever a reason to manage the cyber-, or digital, assets of your plant differently from the physical assets, surely cybersecurity is it.

In other work, I have referred to this distinction as the brains and the brawn. Your steam turbine/generator is brawn. Your DCS and your data historian are brains. With either, if something disrupts your ability to generate the requisite megawatts when called upon, and with it your ability to make money, you don’t want it to happen again, whatever the cause.

It might also be useful to understand what, exactly, is driving cybersecurity and, in particular, the expansion of its definition. One of these drivers is regionalization of our electricity infrastructure. ISOs and RTOs are regional grid and market authorities. In many ways, they function as regional utilities focused on transmission, although two of them, Cal ISO and New York ISO, are bounded by state lines, and a third, ERCOT, serves most of Texas.

Since there are no regional governments, the federal government (especially since 9/11, the devastating Northeast blackout of 2003, and subsequent catastrophic outages caused by Mother Nature or human nature) has carved out a big role for itself in safeguarding the nation’s electricity system. NERC is often called FERC’s “cop on the beat” when it comes to cybersecurity and reliability.

Finally, cybersecurity is one huge unintended consequence of the drive to make control and information systems more open and less proprietary; use commercial off-the-shelf (COTS) components; break down the information silos and islands of communication; streamline control and automation, diagnostics and monitoring, enterprise information management, and wired and wireless communications; and reduce costs.

Fortunately, the cybersecurity threat can be contained by following these eight basic rules of engagement:

1. Compliance is not protection. Compliance involves policies, procedures, documentation, incident reporting, fines, and enforcement. Your corporate guys may have dotted all the i’s and crossed all the t’s on the paperwork, and ticked off all the boxes, but that doesn’t mean the operation of your plant is free from threats. However, it appears that the next version of NERC CIPS, Version 5, expected to be approved by FERC this spring, will focus more on security than on compliance. Compliance with NERC CIPS V.5 is scheduled for July 2015.

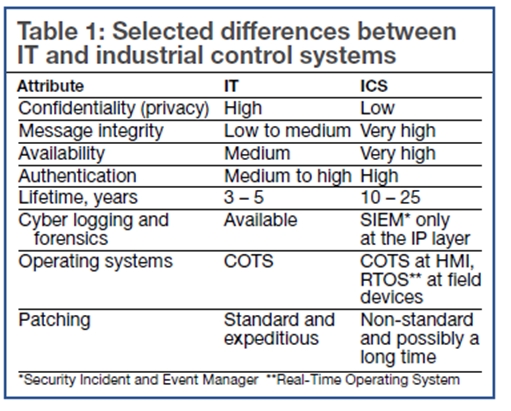

2. IT isn’t the same as OT. Most of the cybersecurity analyses and solutions have come from the information technology (IT) side of the house, say powerplant cyber experts, but these vendors are not necessarily the best equipped to handle the potential threats to the operational technology (OT), or industrial control systems (ICS), in the plant. Common distinctions, offered by well-known cybersecurity expert Joe Weiss of Applied Control Solutions Inc, the brains behind the ICS Conference mentioned earlier, are shown in Table 1.

However, it is also important to acknowledge the commercial rivalry between the suppliers of OT systems to the plant and the suppliers of IT systems that connect the plant to the owner/operator enterprise. Those connections extend to the ISO/RTO or dispatch center, vendor service and support organizations, environmental compliance monitors, and others, in what has come to be known as the meta-organization.

3. Guard the perimeter. The physical boundary of the “brawn” is easy to discern. The electronic or digital perimeter is not. With wireless communications, personal digital assistants (PDA), cell phones, and communication networks everywhere, the perimeter is boundless. There may be a fence around the substation, but the remote terminal unit (RTU) serving it likely is a critical entry point for a cyber-attack. Given that the substation is the link between your facility and the grid, it should be a high priority for your cybersecurity strategy.

4. Air gaps lead back to the dark ages. Many cyber experts advocate for more “air gaps,” or electronic/digital disconnects. In theory, these improve security by minimizing digital entry points, but air gaps also sacrifice the interconnections that make digital systems so efficient at transmitting and propagating information to devices and workers.

One DHS report notes that the average energy management, SCADA, or plant control system has close to a dozen connections to outside networks. And that doesn’t include the networks used by each authorized worker who could access the system at one point or another.

Another problem with air gaps is that workers will defeat them by carrying data across the gap with portable storage media. Some experts suggest alternatives that, while complex, offer life-cycle flexibility and a true solution rather than incremental fixes, patches, and relentless password and validation checks (see sidebar).

5. Get beyond the HMI (human machine interface). Chances are, the cybersecurity threats you aren’t thinking about are lurking in the programmable logic controllers (PLCs), RTUs, and I/O terminals in the plant. Every computer chip or card receiving or transmitting electronic/digital signals to another device is a potential point of malicious or accidental entry. Also, employees and their PDAs and cellphones, sardonically referred to as “bring your own device” (BYOD) by cyber-experts, have to be considered points of entry. Employee PDAs should be secured, or separated from critical networks.

6. Think about the entire supply chain. Unfortunately, every line of computer code is an opportunity for malicious entry into the system, say cyber-experts. Consider the supply chain for your digital system—where the hardware components are sourced, where the programmers reside, who puts the system together, who services the system, and so on.

Cyber specialists note that while vendors on the IT and OT sides are designing and offering solutions, system integrators are the missing link. Just as an EPC contract with warranties makes one entity responsible for ensuring that the plant design meets performance criteria, it may be inevitable that one entity becomes responsible for the cyber health of your digital assets.

7. Think about critical assets, even outside of NERC CIPS. When conducting a reliability-centered maintenance (RCM) program, you (1) identify those components that can really cause trouble, (2) pay close attention to them, and (3) allot them a greater part of the maintenance budget. The same should be true for cybersecurity. One caution: Don’t blindly accept vendor designs that mix safety logic and control-system logic—they may save money but are by no means a cybersecurity best practice.

8. Inventory your cyber assets. In addition to guarding the digital perimeter, creating a thorough inventory of your cyber assets, and organizing them, is a pillar of a cybersecurity management program.
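Rule 7’s RCM-style triage can be sketched as a simple scoring pass over a cyber-asset list. The 1-to-5 consequence and exposure scales, the product used as a score, the proportional budget split, and the example assets are all hypothetical; the point is only that an explicit ranking, rather than intuition, drives where the money goes.

```python
from dataclasses import dataclass

# Hypothetical RCM-style ranking: score = consequence x exposure (1-5 each).


@dataclass
class Asset:
    name: str
    consequence: int   # 1 = nuisance ... 5 = unit trip or safety event
    exposure: int      # 1 = isolated ... 5 = reachable from outside networks


def criticality(asset: Asset) -> int:
    return asset.consequence * asset.exposure


def allocate_budget(assets: list[Asset], budget: float) -> dict[str, float]:
    """Rank assets by score and split the budget in proportion to it."""
    total = sum(criticality(a) for a in assets)
    ranked = sorted(assets, key=criticality, reverse=True)
    if total == 0:
        return {a.name: 0.0 for a in ranked}
    return {a.name: budget * criticality(a) / total for a in ranked}


assets = [
    Asset("GT control PLC", consequence=5, exposure=3),
    Asset("Water treatment PLC", consequence=2, exposure=2),
    Asset("Data historian", consequence=2, exposure=5),
]
for name, share in allocate_budget(assets, 100_000).items():
    print(f"{name}: ${share:,.0f}")
```

Multiplying the two scores pushes assets that are both high-consequence and easily reached to the top, mirroring the RCM habit of concentrating attention on the components that can really cause trouble.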

Embedded digital devices

It’s critical to place what you hear and read about cybersecurity into context. For example, at the ICS Conference referred to above, experts noted (1) PLCs are especially easy to overwhelm because the code is relatively simple, and (2) system integrators are the missing link when it comes to designing and offering solutions.

One expert specifically mentioned that Rockwell Automation’s PLCs are priority targets for hackers. This makes sense because PLCs are COTS components specifically designed for ease of programming by end users and integrators. In addition, Rockwell is recognized as a PLC supplier that relies on its extensive and capable network of integrators.

Part of the context also is that PLC-based automation systems are now competing with distributed control system (DCS) architectures for core plant functions. Traditionally, powerplants have used a DCS for the core process, the gas and steam turbine/generators, and HRSGs—and PLCs for auxiliary sub-systems like water treatment. Today, gas-turbine and combined-cycle systems are relying more and more on PLC-based automation.

Thus, some cybersecurity issues may be at least partly derived from commercial rivalries among PLC and DCS suppliers (although the distinction between the two has blurred) and among OT system suppliers and IT system suppliers.

Inventory

It’s one thing to create an inventory of cyber assets around critical infrastructure. It’s another to keep it up-to-date. Maintaining the accuracy and currency of any database is difficult. For digital automation systems, it all comes under the rubric of change or configuration management.
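One way to make change management concrete is to fingerprint exported configurations and compare snapshots over time. The sketch below assumes controller and HMI configurations can be exported to files in a directory (a hypothetical layout); it hashes each file with SHA-256 and reports what was added, removed, or changed since the baseline.

```python
import hashlib
import tempfile
from pathlib import Path

# Configuration-drift sketch: hash every exported config file and diff snapshots.


def take_snapshot(config_dir: Path) -> dict[str, str]:
    """Map each file's relative path to the SHA-256 of its contents."""
    return {
        p.relative_to(config_dir).as_posix(): hashlib.sha256(p.read_bytes()).hexdigest()
        for p in sorted(config_dir.rglob("*"))
        if p.is_file()
    }


def detect_drift(baseline: dict[str, str], current: dict[str, str]) -> dict[str, list[str]]:
    """Report files added, removed, or changed since the baseline snapshot."""
    return {
        "added": sorted(current.keys() - baseline.keys()),
        "removed": sorted(baseline.keys() - current.keys()),
        "changed": sorted(k for k in baseline.keys() & current.keys()
                          if baseline[k] != current[k]),
    }


# Example with hypothetical exported configuration files.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "plc1.cfg").write_text("setpoint=540\n")
    (root / "hmi.cfg").write_text("alarms=on\n")
    baseline = take_snapshot(root)
    (root / "plc1.cfg").write_text("setpoint=560\n")   # unapproved edit
    (root / "rtu7.cfg").write_text("port=502\n")       # device nobody recorded
    print(detect_drift(baseline, take_snapshot(root)))
```

A hash only says that something changed, not whether the change was approved; tying each reported difference back to a change request is the management half of the job.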

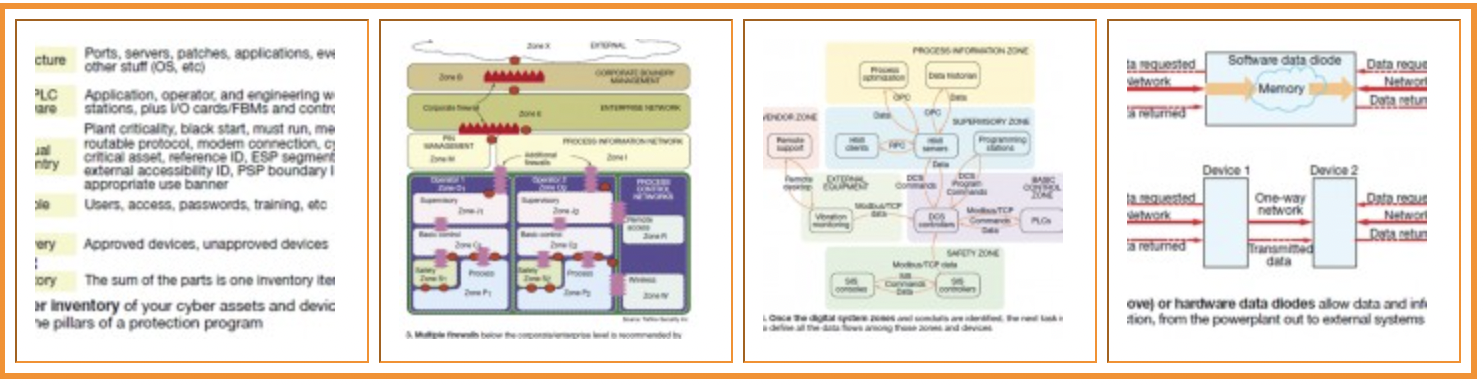

PAS Inc, Houston, which has built cybersecurity compliance solutions for a large owner/operator with multiple assets across a broad region, suggests the following categories for building a complete inventory: Infrastructure, DCS/PLC hardware, manual data entry, people, and discovery (Fig 2). Each of these categories, in turn, possesses certain attributes that can affect the health of the cyber asset, and therefore the ability of the component, as part of a critical asset, to function properly. It may look straightforward on paper, but now think about every component in your plant that can be considered a digital “point of entry.”
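The five categories can be carried as a tag on each inventory record, which makes one routine check easy: comparing what automated discovery actually sees on the network with what the other sources say should be there. Record fields, category spellings, and addresses below are hypothetical, and matching on IP address alone is a simplifying assumption.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical inventory records tagged with the five categories from the text.
CATEGORIES = {"infrastructure", "dcs_plc_hardware", "manual_data_entry", "people", "discovery"}


@dataclass
class InventoryRecord:
    asset_id: str
    category: str
    ip: Optional[str] = None                        # None for people, unnetworked items
    attributes: dict = field(default_factory=dict)  # firmware, owner, location, ...

    def __post_init__(self) -> None:
        if self.category not in CATEGORIES:
            raise ValueError(f"unknown category: {self.category}")


def reconcile(records: list[InventoryRecord]) -> dict[str, list[str]]:
    """Compare addresses seen by network discovery with addresses documented elsewhere."""
    discovered = {r.ip for r in records if r.category == "discovery" and r.ip}
    documented = {r.ip for r in records if r.category != "discovery" and r.ip}
    return {
        "undocumented": sorted(discovered - documented),  # on the wire, not on paper
        "not_seen": sorted(documented - discovered),      # on paper, not on the wire
    }


records = [
    InventoryRecord("DCS-CTRL-1", "dcs_plc_hardware", "10.1.1.10", {"firmware": "4.2"}),
    InventoryRecord("SW-CORE", "infrastructure", "10.1.1.1"),
    InventoryRecord("shift-A-operators", "people"),
    InventoryRecord("scan-001", "discovery", "10.1.1.10"),
    InventoryRecord("scan-002", "discovery", "10.1.1.1"),
    InventoryRecord("scan-003", "discovery", "10.1.1.77"),
]
print(reconcile(records))
```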

Guard the perimeter

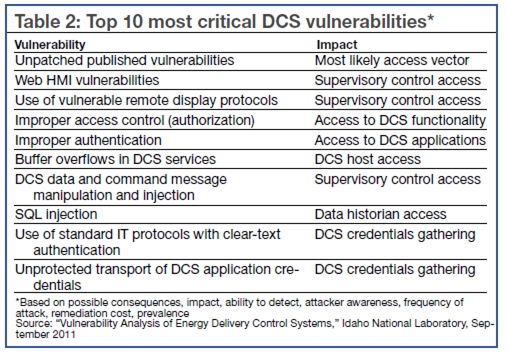

Cybersecurity experts and government officials insist that bad guys are continually poking digitally at the perimeter seeking malicious entry (Table 2). Sometimes they get in. But after a review of the public information on powerplant cybersecurity, it appears that avoiding losses boils down to rather mundane, common-sense strategies. For example, stopping an authorized person from inserting an infected memory stick into the wrong port would have prevented the cyber-incident referenced at the beginning of this article.

So, there needs to be a way of verifying that any portable storage device is safe before it is inserted into a USB port or a disc drive. Such devices can be scrubbed before insertion, or so-called single-use media can be deployed more widely. As in the RCM analogy, this is obviously more critical for a plant operator’s control console, or a PLC or I/O port at the gas turbine, than for a plant engineer working remotely through a PC with off-line data.
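A screening station for portable media might do something like the following before a stick goes anywhere near a control console. The allowed file types and the known-bad hash set are placeholders (a real station would rely on maintained antivirus signatures); the sketch only shows the gatekeeping logic.

```python
import hashlib
import tempfile
from pathlib import Path

# Gatekeeping sketch for a media-screening station; both lists are placeholders.
ALLOWED_SUFFIXES = {".csv", ".txt", ".pdf"}
KNOWN_BAD_SHA256: set[str] = set()   # hypothetical feed of malware file hashes


def screen_media(mount_point: Path) -> list[str]:
    """Return reasons to reject the device; an empty list means it passes."""
    problems = []
    for p in sorted(mount_point.rglob("*")):
        if not p.is_file():
            continue
        rel = p.relative_to(mount_point).as_posix()
        if p.suffix.lower() not in ALLOWED_SUFFIXES:
            problems.append(f"disallowed file type: {rel}")
        elif hashlib.sha256(p.read_bytes()).hexdigest() in KNOWN_BAD_SHA256:
            problems.append(f"matches known-bad hash: {rel}")
    return problems


# Example: a hypothetical stick holding one data file and one autorun file.
with tempfile.TemporaryDirectory() as tmp:
    stick = Path(tmp)
    (stick / "trend_export.csv").write_text("time,MW\n")
    (stick / "autorun.inf").write_text("[autorun]\n")
    print(screen_media(stick))
```

A device passes only when the list comes back empty; anything else goes back to its owner or to the scrubbing step.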

Passwords are a similar issue. Experts suggest replacing role-based passwords (for example, one shared “plant engineer” login) with personal passwords for each potential user. It may be a nuisance to constantly change passwords, or make them longer, more complicated, and more defensible. But it is also a nuisance to pass through a security machine whenever you board a plane, visit a government office, or even have a meeting at a major New York bank headquarters. But we’ve learned to live with it.
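A personal-password policy can be checked mechanically. The thresholds below (12 characters, three of four character classes) and the list of shared role logins are assumptions for illustration, not figures from NERC CIPS or any other standard cited in this article.

```python
import string

# Hypothetical policy values; substitute your own policy's requirements.
MIN_LENGTH = 12
SHARED_ROLE_ACCOUNTS = {"operator", "engineer", "admin"}   # hypothetical role logins


def check_credential(username: str, password: str) -> list[str]:
    """Return policy violations; an empty list means the credential passes."""
    issues = []
    if username.lower() in SHARED_ROLE_ACCOUNTS:
        issues.append("shared role account; issue a personal login instead")
    if len(password) < MIN_LENGTH:
        issues.append(f"shorter than {MIN_LENGTH} characters")
    classes = [
        any(c.islower() for c in password),
        any(c.isupper() for c in password),
        any(c.isdigit() for c in password),
        any(c in string.punctuation for c in password),
    ]
    if sum(classes) < 3:
        issues.append("uses fewer than three character classes")
    if username.lower() in password.lower():
        issues.append("contains the username")
    return issues


print(check_credential("engineer", "turbine1"))
print(check_credential("jsmith", "Gt7-Hrsg!blue"))
```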

Patch management is a third element of guarding the perimeter. As everyone knows from their PC experience, software suppliers regularly deliver patches and anti-virus protections as they are developed to counter known threats. OT software vendors now do the same thing. However, this can be dicey because vendor security solutions often incur safety impacts, especially if legacy software is still being used in other parts of the system. You may have a great HMI screen, but the controllers underneath may still be running Windows 98.

The other major problem with patch management, according to ISA standard TR-62443-2-3, “Security for Industrial Automation and Control Systems: Patch Management in the IACS Environment” (formerly ISA 99) is that patches typically are rolled out during scheduled maintenance outages.

The timeline for outages isn’t the same as the criminal’s timeline for injecting malware or the urgency of fixing software bugs. Some digital systems used at power stations are old and not well supported by vendors, so patches may simply not be a solution in many cases. Finally, patches themselves may be corrupted, or may impair the operation of the software they are intended to correct.
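The two patch problems raised above, corrupted patches and outage timelines that lag the threat, can be combined into one triage rule. The sketch verifies a patch against a SHA-256 value, assuming the vendor publishes one through a separate channel, then decides whether waiting for the next outage leaves too long an exposure. The 30-day tolerance and the wording of the outcomes are hypothetical.

```python
import hashlib
from datetime import date

# Hypothetical OT patch triage: integrity check, then outage-timing decision.
MAX_DAYS_EXPOSED_CRITICAL = 30   # assumed tolerance for critical fixes


def verify_patch(data: bytes, published_sha256: str) -> bool:
    """True if the file matches the hash the vendor published separately."""
    return hashlib.sha256(data).hexdigest() == published_sha256.strip().lower()


def triage(data: bytes, published_sha256: str, severity: str,
           today: date, next_outage: date) -> str:
    if not verify_patch(data, published_sha256):
        return "reject: hash mismatch, obtain a fresh copy from the vendor"
    wait_days = (next_outage - today).days
    if severity == "critical" and wait_days > MAX_DAYS_EXPOSED_CRITICAL:
        return f"interim: apply compensating controls for {wait_days} days until outage"
    return f"schedule: install at outage on {next_outage.isoformat()}"


patch = b"example patch payload"
vendor_hash = hashlib.sha256(patch).hexdigest()
print(triage(patch, vendor_hash, "critical", date(2013, 3, 1), date(2013, 10, 15)))
print(triage(b"tampered payload", vendor_hash, "critical", date(2013, 3, 1), date(2013, 10, 15)))
```

A matching hash shows only that the file is the one the vendor shipped; whether the patch impairs the software it fixes still has to be tested offline.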

Robust, more permanent

A permanent solution to cybersecurity is probably a pipe dream. No one has figured out how to stop crime. But it does seem clear that the cyber community is moving towards solutions that are more robust, more permanent, and less dependent on patches, digital pat downs, and passwords.

For example, Tofino Security Inc suggests dividing the digital system into zones, identifying all conduits between zones, and then defining the data and information flow through those conduits. Additional firewalls can be established below the enterprise and corporate firewall levels (Figs 3, 4).
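The zones-and-conduits idea reduces to a default-deny rule: A flow between zones is allowed only if a conduit for it has been declared. In this sketch the zone names, protocols, and ports are hypothetical, and conduits are modeled as one-way flows for simplicity; the reachability check shows that, with these conduits, nothing on the enterprise side can reach the control zone.

```python
from collections import deque
from dataclasses import dataclass

# Zone-and-conduit sketch with default deny. All names and ports are hypothetical.


@dataclass(frozen=True)
class Conduit:
    src: str        # zone the data leaves
    dst: str        # zone the data enters
    protocol: str
    port: int


CONDUITS = {
    Conduit("field", "control", "modbus-tcp", 502),
    Conduit("control", "dmz", "opc-ua", 4840),
    Conduit("dmz", "enterprise", "https", 443),
}


def flow_allowed(src: str, dst: str, protocol: str, port: int) -> bool:
    """Allow a flow only if a matching conduit has been declared."""
    return Conduit(src, dst, protocol, port) in CONDUITS


def reachable_from(zone: str) -> set[str]:
    """Zones that data starting in `zone` could reach through declared conduits."""
    seen, queue = {zone}, deque([zone])
    while queue:
        current = queue.popleft()
        for c in CONDUITS:
            if c.src == current and c.dst not in seen:
                seen.add(c.dst)
                queue.append(c.dst)
    return seen - {zone}


print(flow_allowed("control", "dmz", "opc-ua", 4840))       # True
print(flow_allowed("enterprise", "control", "https", 443))  # False: no such conduit
print(reachable_from("enterprise"))                         # set()
```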

A third device for protecting the perimeter is called the data diode (Fig 5). As the name implies, the data diode allows data to be transferred in only one direction. It is inserted between a source of data at the plant control system and the computer system or network, and can be hardware- or software-based. The hardware diode may be the more secure approach because a software diode can be accessed from the computer, or remotely, and “converted” into a bi-directional pathway.

Another appliance is called a unified threat management (UTM) device. At least one powerplant cyber expert at the ICS Conference stated that he was a “big fan” of UTM. It is described as a special type of firewall combining packet inspection, network anti-virus protection, an in-line network intrusion detection sensor, an intrusion prevention system, and built-in authentication mechanisms. An example location would be the conduit between the plant’s data historian and the corporate or enterprise information network.

According to Barry Hargis, Engineered Solutions Inc, in a presentation at the 55th ISA Power Industry Symposium, a hardware data diode completely protects the powerplant control system from a cyber-attack while still allowing data to flow out of the system. However, diodes involve additional costs, they create latency (delays) in data transmission between the control level and the computer system, and they may not be appropriate for all data sources, such as protective signals or controller-to-controller signals.
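Because a diode passes nothing back, the receiving side cannot ask for a lost packet to be resent. A simple software pattern (assumed here, not taken from any vendor’s product) is to number each frame so the receiver can at least detect and log gaps. The frame layout is an assumption, and sequence-number wraparound is ignored for brevity.

```python
import struct

# One-way link framing sketch: 4-byte sequence number + payload (assumed layout).
HEADER = struct.Struct("!I")   # network-order unsigned 32-bit sequence number


def frame(seq: int, payload: bytes) -> bytes:
    return HEADER.pack(seq) + payload


class DiodeReceiver:
    """Receive-only side: it cannot request resends, so it records gaps instead."""

    def __init__(self) -> None:
        self.expected = 0
        self.missing: list[int] = []
        self.delivered: list[bytes] = []

    def on_frame(self, data: bytes) -> None:
        (seq,) = HEADER.unpack_from(data)
        if seq < self.expected:
            return                                  # duplicate or stale frame: drop
        self.missing.extend(range(self.expected, seq))
        self.delivered.append(data[HEADER.size:])
        self.expected = seq + 1


rx = DiodeReceiver()
for seq, value in [(0, b"MW=412"), (1, b"MW=413"), (3, b"MW=415")]:
    rx.on_frame(frame(seq, value))
print(rx.missing)     # [2]
print(rx.delivered)   # [b'MW=412', b'MW=413', b'MW=415']
```

Sending each value more than once is one way to tolerate loss without a return path; the duplicate check above discards the repeats.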

Consider ‘virtual isolation’ for plant security

In a relatively new concept, Lee McMullen, cybersecurity specialist at Hurst Technologies Corp, Angleton, Tex, suggests employing a virtual-machine architecture to manage cybersecurity without sacrificing the productivity gains that come from sharing an integrated digital automation system’s information across the meta-organization. Think of a virtual machine as a software program, running on a host computer, that emulates a physical computer. Depending on the power of the host computer, it can run multiple virtual machines.

The virtual isolation concept uses a host computer configured with a minimum of two virtual machines. The first virtual machine is outside the plant system connection and communicates with the corporate or other non-process networks. The second virtual machine, on the inside, connects to the process systems. The host computer manages and monitors both virtual machines and acts as a data and communication isolator.

On the inside, the virtual machine receives data from the legacy systems and transports it through the host to the outside virtual machine. To enhance security, the host computer monitors all transactions and maintains an auditable transaction log. Data requests and limited control commands from the outside virtual machine are submitted to the host computer, where they are verified for security authorization and screened for malicious content, and then passed to the inside virtual machine.

To maximize flexibility and further enhance security, the operating systems of each virtual machine and of the host computer use separate, dedicated disk drives and network cards within the host computer. The custom hardening of each virtual machine, claims McMullen, allows the most efficient and secure configuration method possible for connecting multiple networks using a single physical machine.

Standard hardening methods include network isolation, port control, and various secure communication schemes. These methods should be applied to each virtual machine as required by their connected networks. One option that is unique to the virtual machines is the capability to run different operating systems within the virtual isolator to maximize security.

The virtual machines and their isolated network connections support custom applications that provide a method of passing data from the legacy process system communication network to the corporate network, as well as passing commands and data requests to the process network.

There are three levels of security in this configuration:

- Network connectivity on the corporate side is limited to only the specific ports necessary for passing information requests to and from the outside virtual machine.

- The receiving application only accepts information request messages previously identified in a control database on the host computer. This host database is not accessible to either the enterprise or the process networks, only working within the host computer.

- Communication and security configurations are unique to each virtual machine and the host computer. This ability to tailor the configuration for each application and legacy system allows the greatest possible level of integration and security while reducing overall complexity and cost.
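The first two of these levels, plus the host’s auditable transaction log, can be modeled as a pair of checks that record every decision. This is a hypothetical illustration of the concept, not Hurst Technologies’ implementation: the open port, the JSON message format, and the registered request types are assumptions.

```python
import json
import time
from dataclasses import dataclass, field


@dataclass
class IsolationHost:
    # Level 1: only these corporate-side ports accept connections (assumed value).
    open_ports: set = field(default_factory=lambda: {8443})
    # Level 2: private control database of registered request types and fields.
    control_db: dict = field(default_factory=lambda: {
        "read_tag": {"tag"},
        "read_trend": {"tag", "hours"},
    })
    # Every decision is appended here so it can be audited later.
    audit_log: list = field(default_factory=list)

    def screen(self, port: int, raw: str) -> bool:
        ok, reason = self._check(port, raw)
        self.audit_log.append({"time": time.time(), "port": port, "ok": ok, "reason": reason})
        return ok

    def _check(self, port: int, raw: str) -> tuple[bool, str]:
        if port not in self.open_ports:
            return False, "port closed"
        try:
            msg = json.loads(raw)
        except json.JSONDecodeError:
            return False, "malformed message"
        if not isinstance(msg, dict) or not isinstance(msg.get("type"), str):
            return False, "malformed message"
        required = self.control_db.get(msg["type"])
        if required is None:
            return False, "unregistered request type"
        if set(msg) != required | {"type"}:
            return False, "unexpected or missing fields"
        return True, "accepted"


host = IsolationHost()
print(host.screen(8443, '{"type": "read_tag", "tag": "GT1.MW"}'))   # True
print(host.screen(8443, '{"type": "write_tag", "tag": "GT1.MW"}'))  # False
print(host.screen(502, '{"type": "read_tag", "tag": "GT1.MW"}'))    # False
print([entry["reason"] for entry in host.audit_log])
```

Rejecting any message whose fields differ from the registered set, rather than ignoring extras, keeps a crafted request from smuggling parameters past the check.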

A user outside the plant system submits a request for information to the enterprise virtual machine either through a web interface or through a dedicated client. The user request is logged to the enterprise virtual machine’s database where it is parsed to determine if it is a valid request. The validity of the request is verified using standard, current security processes. Once validated, the host computer extracts the desired information from the process database and returns the data to the enterprise virtual machine in a temporary file.

Control function requests are passed in the same manner. The desired function is received by the enterprise virtual machine and then is evaluated by the host computer. If the requested function is valid and the security of the requestor properly verified, the host will pass the instruction on to the process virtual machine for execution. The process virtual machine will receive the control instruction and then evaluate it against the current state of the process system.

If the instruction passes all these checks, the process system executes the command. If it fails, the control instruction is rejected and the requestor notified. This introduces a delay for control but provides a secure method for passing a control command from outside of the process network.
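The control path just described (authorize at the host, sanity-check against plant state in the process virtual machine, then execute or reject and notify) can be sketched as follows. The requester names, the single command, and the MW-range state check are hypothetical stand-ins for whatever rules a real system would enforce.

```python
from dataclasses import dataclass

# Hypothetical requester -> permitted commands table held by the host.
AUTHORIZED = {"dispatch_desk": {"set_mw_target"}}


@dataclass
class ProcessState:
    unit_online: bool
    min_mw: float
    max_mw: float


def handle_control_request(user: str, command: str, value: float,
                           state: ProcessState) -> str:
    """Return the outcome message that would be sent back to the requester."""
    # Stage 1 (host): is this requester allowed to issue this command?
    if command not in AUTHORIZED.get(user, set()):
        return f"rejected: {user} not authorized for {command}"
    # Stage 2 (process VM): does the command make sense for the current state?
    if not state.unit_online:
        return "rejected: unit offline"
    if not state.min_mw <= value <= state.max_mw:
        return f"rejected: {value} MW outside {state.min_mw}-{state.max_mw} MW"
    # Stage 3: all checks passed, so hand the command to the process system.
    return f"executed: {command}={value}"


state = ProcessState(unit_online=True, min_mw=150.0, max_mw=420.0)
print(handle_control_request("dispatch_desk", "set_mw_target", 380.0, state))
print(handle_control_request("dispatch_desk", "set_mw_target", 500.0, state))
print(handle_control_request("vendor_remote", "set_mw_target", 300.0, state))
```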

Post-mortem incident analysis

A visceral presentation given by a powerplant engineer at the ICS Conference illustrates many of the conflicting issues in cybersecurity.

A 1980s-vintage coal-fired plant with 400-MW units had to replace its legacy control system. The system in place now includes 4500 I/O points, Ethernet communications, boiler and turbine control, burner management system (BMS), data acquisition system, data historian, OPC (Object Linking and Embedding for Process Control), relay logic systems (PLCs), sequence-of-events (SOE) recorder, annunciator with pan alarm windows, and other pieces and parts. This information now comes through one HMI. The data historian (third party) has a hard connection into the DCS.

In the meantime, the OPC vendor had to supply a patch for the FTP (file transfer protocol) server associated with the data historian. Here, Microsoft middleware is used for machine-to-machine communication. To insert the patch, the plant had to be “shut down and cold,” according to the presenter.

After final tuning of the new DCS, the plant was ready to start up. Operators fired up the boiler. Not long after, trending of the I/O points slowed down, then, much to everyone’s consternation, stopped working. The application processor was no longer updating the HMI. All-important trends in the BMS were not visible. Operators had to trip the unit.

Unfortunately, the startup sequence continued on cruise control, but operators had no control capability and no view into the vendor’s “fault tolerant” computers. No alarms came on at the HMI (although alarm signals were apparently available at the processor level), because the alarm management system was embedded in the HMI.

The problem of controllers talking to each other with no operator visibility had not surfaced during factory acceptance testing of the DCS. The plant waited an hour for the vendor to respond and reset the process. In the meantime, the unit had reached 60% MCR.

Fortunately, control was restored before a catastrophic event occurred. The root cause proved to be redundant OPC servers corrupting the data from the DCS because they could not correctly handle time-series data and were losing the SOE recorder data.
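One lesson of this incident is that data-quality supervision should not live only inside the HMI. A watchdog of the following kind, running separately, would look for two symptoms consistent with those described: updates that stop arriving and sequence-of-events time stamps that go backwards. The tag names, the 5-s staleness limit, and the sample data are assumptions; this is a sketch, not the presenter’s remedy.

```python
from dataclasses import dataclass

STALE_AFTER_S = 5.0   # assumed staleness limit, seconds


@dataclass
class Sample:
    tag: str
    source_time: float    # time stamp applied at the source, seconds
    received_time: float  # local arrival time, seconds


def check_stream(samples: list[Sample], now: float) -> list[str]:
    """Flag out-of-order time stamps and tags that have stopped updating."""
    alarms = []
    newest: dict[str, float] = {}
    last_arrival: dict[str, float] = {}
    for s in samples:
        prev = newest.get(s.tag)
        if prev is not None and s.source_time <= prev:
            alarms.append(f"{s.tag}: time stamp {s.source_time} not after {prev}")
        else:
            newest[s.tag] = s.source_time
        last_arrival[s.tag] = s.received_time
    for tag, arrived in last_arrival.items():
        if now - arrived > STALE_AFTER_S:
            alarms.append(f"{tag}: no update for {now - arrived:.1f} s")
    return alarms


samples = [
    Sample("BMS.FLAME_1", 100.0, 100.1),
    Sample("BMS.FLAME_1", 101.0, 101.1),
    Sample("SOE.52G", 100.5, 100.6),
    Sample("SOE.52G", 100.2, 101.2),   # arrives with an earlier time stamp
]
for alarm in check_stream(samples, now=108.0):
    print(alarm)
```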

Here are some of the takeaways offered by the presenter. While this was not a “bad guy” cyber-incident, it met the broader definition of an “electronic communication between systems affecting confidentiality, availability, reliability, or other performance attributes.”

- OPC is “black box” software code, and you need to understand its “guts,” including the original platform it was built on.

- Vendors consider their software code proprietary and resist the notion that customers must see it. In fact, customers are specifically prevented from this knowledge when they sign a software user agreement.

- Vendors must provide root-cause analysis that is acceptable to the customer.

- Field IT has to be designed to a different standard than corporate IT. In this case, a simple network upset had vast potential consequences.

- Remote vendor log-in is a potential portal for cyber-threats—the plant doesn’t necessarily know the vendor’s people at the remote help site.

- You cannot divorce change or configuration management processes from cybersecurity processes and procedures.

- Automation systems do not “plug and play” the way you might think.

- Digital systems may have become so complex, no one person can know how they work or which vendor is responsible. CCJ