It’s time to think differently about cybersecurity. As the classic movie Cool Hand Luke famously put it, you have to “get your mind right” and accept some fundamental principles, if not truths.

- You are never done. Sure, you can comply with a regulation or standard, but compliance isn’t security. That is perhaps the most frequently repeated observation in recent cybersecurity discussions.

- Fear sells. The fact is, the unintended consequence of all-pervasive digital systems in daily life and at work is a continuous, escalating threat environment that exists everywhere.

- The free-flowing network is dead. The old dream of fully open digital systems, in which data propagate freely and move continuously among connected devices and people, has contributed to today’s cybersecurity nightmare. Segmentation is the new theme. Imagine the opening of the old TV show Get Smart, with actor Don Adams proceeding through gate after gate as each door slams shut behind him.

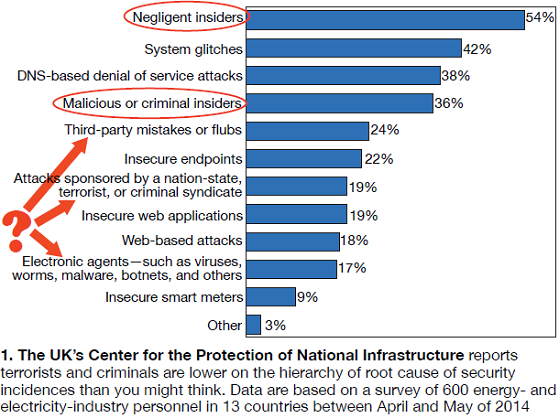

- The larger threat is the one you don’t want to think about. Trust is paramount to getting things done in the work world: You have to trust your team, your squad, your employees. But most post-incident assessments to date show that a disgruntled employee or an unintentional mistake is more likely to cause cyber problems than a terrorist half a planet away (Fig 1).

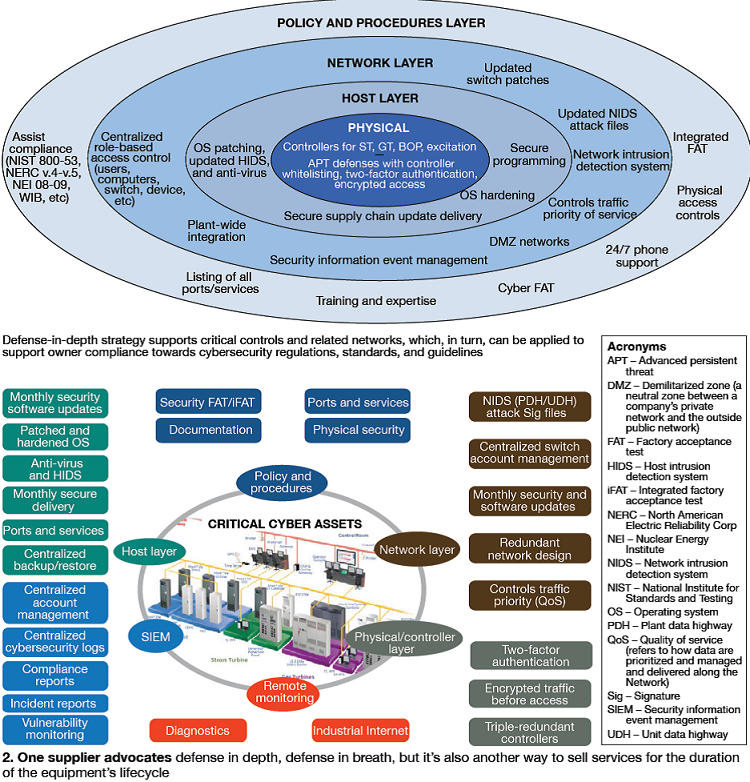

- Your threat is someone else’s opportunity. Specifically, suppliers of digital devices, cybersecurity solutions providers, consultants, and even the government benefit from the escalating threat and fear environment (Fig 2). Let’s face it, there’s a reason why every high-profile cybersecurity event in the media is associated with an axis-of-evil-type country.

Given these truths, perhaps it is more productive to think about cybersecurity as part of your safety culture and your maintenance practices and procedures. It’s all about how you and your organization perceive and mitigate risk, and how much you are willing to pay to do so. These days, most owner/operators mitigate risk by transferring it, as best they can, to someone else. From the plant staff’s perspective, that someone else is usually the control system vendor or the corporate engineering or IT/OT staff, depending on the size of the owner/operator.

A presentation by a major Midwest utility at the CTOTF Fall Conference last year illustrates several of the principles above. Beyond the usual verbiage found in cybersecurity slides, these are the points that matter for plants:

- Data diodes, a popular and generally inexpensive digital security device, were installed at critical large generating assets to allow only one-way, real-time data communication from the plant to the corporate network.
- The owner worked with its controls vendor to “harden” the digital systems during a retrofit of a peaking facility with multiple LM6000s.
- Anti-virus software and intrusion-detection devices require constant updates and patches.
- Systems are tested frequently for vulnerabilities.

The challenges listed on the presentation’s final slide are managing remote access and remote operators, handling transient devices such as USB drives and external hard drives, and deciding what to do about aging controls and HMIs. Most industry-level cybersecurity presentations suggest that transient devices cause the most significant cyber events and that older devices, especially programmable logic controllers (PLCs), are the most vulnerable to intrusion. Beyond legacy and aging controls, wireless digital systems will present a whole new level of cybersecurity challenges going forward.

So far, the industry’s response to cybersecurity is the equivalent of educators “teaching to the test” rather than teaching their students real knowledge and how to think. In other words, most owner/operators are tying up resources complying with the NERC CIP standards and doing their utmost to avoid an “audit,” but that is not the same as independently assessing your risks and mitigating them.

The leader of the CTOTF cybersecurity session opened it with a provocative slide: “Defining the digital footprint: Why all the fuss about cybersecurity?” Indeed, a slide in another presentation in the session asks the audience whether they are familiar with these now-infamous cyber events: Stuxnet, 2010; Aurora, 2007; Slammer, 2003; and Shamoon, 2012. Yet another slide from the session shows that the Industrial Control Systems Cyber Emergency Response Team (ICS-CERT) reported 256 cyber incidents in 2013, more than half of them in the energy sector. This raises the question: How severe were these incidents? And what constitutes a required versus a voluntary reporting scenario?

If the industry is still fixated on these four events (one of which was staged by the government as a test), and the severity of the reported incidents isn’t known, then maybe the opening question is valid. What IS all the fuss about, beyond a host of costly new regulatory compliance actions? More specifically, how do you credibly quantify the risks at a given facility and get beyond the background noise and fear?

Of course, you can never be sure. No one tallies and reports the events that were prevented. You don’t see motivational posters with phrases like, “245 operating days since a lost-time cybersecurity event!” Also, much of what goes on in the cybersecurity arena is kept confidential, both to spare owner/operators embarrassment and to keep the information away from the wrong people.

One control systems vendor representative offered CTOTF attendees a tutorial on measuring and mitigating risk. It focused mostly on ranking your digital assets by their risk exposure and then translating that ranking into exposure-loss numbers, much like an insurance evaluation or a reliability-centered maintenance (RCM) assessment. His slides identify workstations, operations workplaces, and field controller devices as the places to focus your mind. Interestingly, according to a separate slide, most of the financial liability comes in the form of government fines and judgment penalties.
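To make the ranking idea concrete, here is a minimal sketch assuming the common insurance-style formulation: expected annual loss equals the estimated annual likelihood of a compromise times the estimated cost of one event. The vendor’s actual methodology isn’t described here, so the `DigitalAsset` class, the asset list, and every probability and dollar figure below are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class DigitalAsset:
    """Hypothetical record for one digital asset in a plant inventory."""
    name: str
    annual_likelihood: float  # assumed probability of a successful compromise per year (0..1)
    loss_per_event: float     # assumed cost of one compromise in dollars, including fines and penalties

    @property
    def expected_annual_loss(self) -> float:
        # Insurance-style exposure: likelihood x consequence
        return self.annual_likelihood * self.loss_per_event


def rank_by_exposure(assets: list[DigitalAsset]) -> list[DigitalAsset]:
    """Return the assets sorted from highest to lowest expected annual loss."""
    return sorted(assets, key=lambda a: a.expected_annual_loss, reverse=True)


if __name__ == "__main__":
    # Illustrative numbers only; none of these come from the CTOTF presentation.
    inventory = [
        DigitalAsset("engineering workstation", 0.10, 500_000),
        DigitalAsset("operator HMI", 0.05, 2_000_000),
        DigitalAsset("field controller (PLC)", 0.02, 3_000_000),
    ]
    for asset in rank_by_exposure(inventory):
        print(f"{asset.name:25s} ${asset.expected_annual_loss:>12,.0f}")
```

Ranking by expected loss rather than by likelihood alone keeps a rarely attacked but high-consequence device, such as a field controller, from sinking to the bottom of the list.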

Finally, one likely consequence of the cybersecurity push is that powerplants defined as critical (a “high” designation) under the latest NERC CIP standards may begin to resemble nuclear plants in terms of security. For example, nuclear plants make extensive use of contract labor from “preferred,” top-tier, trusted outsourced providers whose workers are certified and trained in the policies and procedures of the specific plant or owner/operator.

Because cybersecurity standards require site employees, contractors, and some corporate employees to undergo regular training and verification and to meet documentation and reporting requirements, it will simply be easier and less costly to limit access at all critical plants to “trusted” entities and “privileged insiders.” Open access will no longer exist. Each member of an organization will have a list of privileges and digital-system access points that must be constantly reviewed, updated, and revised. Human interaction with digital devices will be on a strict “need to know” basis.
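One way to picture that “need to know” regime is a deny-by-default privilege list tied to a mandatory review date. The sketch below is a hypothetical illustration, not anything prescribed by NERC CIP or described in the CTOTF presentations: the `Member` class, the access-point names, and the 90-day `REVIEW_INTERVAL` are all assumptions.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta

# Hypothetical review cycle; real intervals would come from the owner/operator's own program.
REVIEW_INTERVAL = timedelta(days=90)


@dataclass
class Member:
    """Hypothetical record of one person's granted digital-system access points."""
    name: str
    privileges: set[str] = field(default_factory=set)  # access points explicitly granted
    last_reviewed: date = date.min                      # never reviewed means no access

    def review_is_current(self, today: date) -> bool:
        return today - self.last_reviewed <= REVIEW_INTERVAL

    def can_access(self, access_point: str, today: date) -> bool:
        # Deny by default: access requires an explicit grant AND an up-to-date review.
        return self.review_is_current(today) and access_point in self.privileges


def overdue_reviews(members: list[Member], today: date) -> list[str]:
    """List the members whose privileges have lapsed for lack of review."""
    return [m.name for m in members if not m.review_is_current(today)]


if __name__ == "__main__":
    tech = Member("I&C technician", {"HMI-1"}, last_reviewed=date(2024, 1, 1))
    print(tech.can_access("HMI-1", date(2024, 2, 1)))    # granted and review current
    print(tech.can_access("DCS-eng", date(2024, 2, 1)))  # never granted
    print(overdue_reviews([tech], date(2024, 6, 1)))     # review has lapsed
```

Making a lapsed review revoke access outright means privileges that nobody revisits expire instead of quietly accumulating.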

Another likely consequence is that the notion of software “functionality” will change. It turns out that the bells and whistles software suppliers love to include, often unused or even unwanted, represent vulnerability points, especially if they are not deliberately disabled. This change also supports the notion that control-system suppliers will have a greater role in the lifecycle management of their products for powerplants. Software will have to become less “off the shelf” and more customized for every customer. That will certainly raise costs.

Although most government and national lab documents on cybersecurity for energy systems read like bad acronyms chasing worse ones, Cybersecurity Procurement Language for Energy Delivery Systems, issued by the Energy Sector Control Systems Working Group (under the auspices of Pacific Northwest National Laboratory, PNNL) is highly readable and does a good job explaining how to get your mind right about specifying control system hardware to work toward true cybersecurity, not just compliance. CCJ